Exploring the Power of Computer Graphics with Babylon.js

- Johann H. Armenteros

- Nov 2, 2025

- 6 min read

Computer graphics on the web turns math into pixels. This article is a quick tour of the fundamentals and how they map to Babylon.js. We’ll cover coordinate spaces and cameras; meshes, materials, and lighting; depth, transparency, textures, and sampling; shaders and rasterization. You’ll see the Babylon building blocks—Scene, Camera, Mesh, Material, Light—and how the engine targets WebGL2 or WebGPU.

What is Babylon.js

Babylon.js is a modern, open-source 3D engine for the web. It lets you build interactive scenes with cameras, meshes, materials, lights, physics, and post-effects, rendering via WebGL2 or WebGPU. It includes tools like the Inspector and the Node Material Editor, and loads formats like glTF/glb.

In a nutshell, the following layer-by-layer mental model describes how Babylon.js relates to the rest of the system it runs on.

Application (your code): TS/JS app logic, UI, asset loading, and the game loop, which calls engine.runRenderLoop() (or requestAnimationFrame) to update scenes and issue draws through Babylon.js.

Browser: provides the JavaScript engine and the canvas context, handles scheduling, memory, and security sandboxing, and exposes the WebGL/WebGPU interfaces to JS. It also runs a GPU process under the hood.

Babylon.js (engine & scene graph): abstracts GPU details with high-level constructs such as Scene, Camera, Mesh, Material, Light, post-process pipelines, loaders, and inspectors. It builds shaders, uploads buffers/textures, sorts draw calls, and targets WebGL or WebGPU depending on support.

Graphics API (WebGPU | WebGL)

WebGPU: modern, explicit API (command buffers, pipelines, bind groups, compute).

WebGL: state-machine API based on OpenGL ES (WebGL 1 on ES 2.0, WebGL2 on ES 3.0); no native compute, with extensions for advanced features.

Both compile shaders, manage GPU resources, and submit draw calls.

GPU/Driver (behind the scenes): the browser translates API calls to native backends (Metal/D3D/Vulkan); the GPU executes shaders, rasterizes, and writes frames.
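To make the "depending on support" part of the layer model concrete, here is a minimal sketch of how a page could decide between the two graphics APIs. The function pickGraphicsBackend and the stand-in objects are hypothetical and are not part of Babylon.js. The sketch only relies on two browser facts: WebGPU is exposed as navigator.gpu, and WebGL contexts come from canvas.getContext(). A real check would also await navigator.gpu.requestAdapter(), which is skipped here to keep the example synchronous.

```javascript
// Hypothetical helper: decide which graphics API a page could use.
// `nav` stands in for window.navigator and `canvas` for an HTMLCanvasElement;
// both are passed in so the logic can be exercised outside a browser.
function pickGraphicsBackend(nav, canvas) {
  // Browsers with WebGPU support expose it as navigator.gpu.
  if (nav && nav.gpu) {
    return "webgpu";
  }
  // Otherwise try WebGL2 first, then fall back to WebGL 1.
  if (canvas.getContext("webgl2")) {
    return "webgl2";
  }
  if (canvas.getContext("webgl")) {
    return "webgl";
  }
  return "none";
}

// Stand-in objects that mimic a browser without WebGPU but with WebGL2.
const fakeNavigator = {};
const fakeCanvas = {
  getContext: (type) => (type === "webgl2" ? {} : null),
};

console.log(pickGraphicsBackend(fakeNavigator, fakeCanvas));
```

An engine performs a check of this kind once at startup, so the rest of your scene code stays the same whichever backend ends up being used.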

Meshes

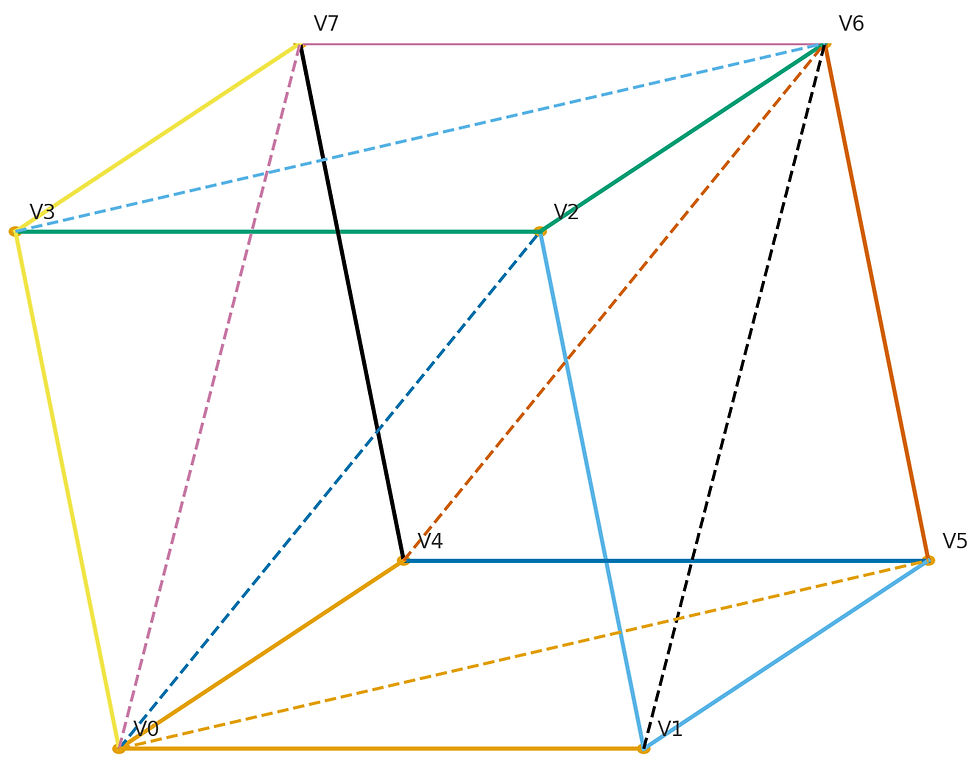

A mesh is the geometric container the GPU draws: a set of vertices plus (usually) an index buffer that connects them into triangles, lines, or points. Vertices are points with attributes: position (required), normal, tangent, UVs, color, skin weights, and so on. The index buffer reuses vertices to build faces efficiently.

In the pipeline, the vertex shader runs per vertex, transforms positions through matrices, and passes attributes to the fragment stage. Shared vertices with averaged normals give smooth shading; duplicating vertices with different normals/UVs creates hard edges or seams.

In Babylon.js, a Mesh wraps these GPU buffers; you provide attributes via VertexData/VertexBuffer and indices, or load them from formats like glTF.
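The idea of vertices plus an index buffer can be sketched with plain arrays. The example below is a sketch in plain JavaScript rather than Babylon.js code: it describes a quad as four vertices and six indices, using the same flat-array layout (x, y, z per vertex, three indices per triangle) that vertex data is commonly supplied in. The helper names getVertex and faceNormal are made up for this illustration.

```javascript
// A unit quad in the XY plane: 4 vertices, 3 floats (x, y, z) each.
const positions = [
  -0.5, -0.5, 0, // vertex 0: bottom-left
   0.5, -0.5, 0, // vertex 1: bottom-right
   0.5,  0.5, 0, // vertex 2: top-right
  -0.5,  0.5, 0, // vertex 3: top-left
];

// Two triangles that share vertices 0 and 2 instead of duplicating them.
const indices = [0, 1, 2, 0, 2, 3];

// Read vertex i out of the flat positions array.
function getVertex(i) {
  return [positions[3 * i], positions[3 * i + 1], positions[3 * i + 2]];
}

// Unit face normal of triangle t, from the cross product of two of its edges.
function faceNormal(t) {
  const [a, b, c] = [0, 1, 2].map((k) => getVertex(indices[3 * t + k]));
  const e1 = [b[0] - a[0], b[1] - a[1], b[2] - a[2]];
  const e2 = [c[0] - a[0], c[1] - a[1], c[2] - a[2]];
  const n = [
    e1[1] * e2[2] - e1[2] * e2[1],
    e1[2] * e2[0] - e1[0] * e2[2],
    e1[0] * e2[1] - e1[1] * e2[0],
  ];
  const len = Math.hypot(n[0], n[1], n[2]);
  return n.map((v) => v / len);
}

const vertexCount = positions.length / 3;
const triangleCount = indices.length / 3;
console.log(`${triangleCount} triangles from ${vertexCount} vertices`);
console.log(faceNormal(0), faceNormal(1));
```

Without the index buffer the same quad would need six vertices. Both triangles are coplanar, so they report the same face normal, which is why sharing the vertices (and their normals) is safe here.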

For example, when the Babylon.js engine runs the following code:

const mesh = BABYLON.MeshBuilder.CreateBox("box", {}, scene);

it creates a virtual 3D object that looks like this:

Materials

A material defines how a mesh’s surface looks and reacts to light. The mesh supplies geometry (vertices, indices, UVs, normals). The material provides shader programs and parameters—colors, metalness/roughness, normal maps, emissive, transparency—that the GPU uses to shade pixels. Changing the material changes appearance without altering geometry. Multiple meshes can share one material; a mesh can also use multiple materials via submeshes.

In Babylon, Material is the base class. Common implementations are StandardMaterial (classic lighting) and PBRMaterial (physically based).

An example of how to create a material:

const myMaterial = new BABYLON.StandardMaterial("myMaterial", scene);

It can then be assigned to a mesh like this:

mesh.material = myMaterial;

Materials respond to light in four classic ways:

Diffuse — the base color/texture you see under regular lighting.

Specular — the shiny highlight a light paints on the surface.

Emissive — the color/texture as if the object were glowing by itself.

Ambient — a gentle tint from the scene’s overall background light.

Notes: Diffuse and Specular need at least one light in the scene. Ambient shows only if the scene’s ambient light/color is set.
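To see how the four terms combine, here is a heavily simplified, Blinn-Phong style sketch in plain JavaScript. It is not the shader Babylon.js actually generates. The function shadePoint and the shape of its arguments are assumptions made for this example, although the four material fields loosely mirror StandardMaterial's diffuseColor, specularColor, emissiveColor, and ambientColor.

```javascript
// Small helpers for [x, y, z] arrays.
const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const normalize = (v) => {
  const len = Math.hypot(v[0], v[1], v[2]);
  return v.map((c) => c / len);
};

// Simplified shading of one surface point with at most one light.
// `light` is either null or { toLight: [x, y, z] } (hypothetical shape).
function shadePoint(material, sceneAmbient, normal, toEye, light) {
  let diffuseAmount = 0;
  let specularAmount = 0;
  if (light) {
    const n = normalize(normal);
    const l = normalize(light.toLight);
    const e = normalize(toEye);
    // Half vector between the light and eye directions (Blinn-Phong).
    const h = normalize([l[0] + e[0], l[1] + e[1], l[2] + e[2]]);
    diffuseAmount = Math.max(dot(n, l), 0);
    if (diffuseAmount > 0) {
      specularAmount = Math.pow(Math.max(dot(n, h), 0), material.specularPower);
    }
  }
  // Sum the four contributions per color channel and clamp to 1.
  return [0, 1, 2].map((ch) =>
    Math.min(
      1,
      material.emissive[ch] +
        material.ambient[ch] * sceneAmbient[ch] +
        material.diffuse[ch] * diffuseAmount +
        material.specular[ch] * specularAmount
    )
  );
}

const redPlastic = {
  diffuse: [1, 0, 0],
  specular: [1, 1, 1],
  emissive: [0, 0, 0.2],
  ambient: [1, 1, 1],
  specularPower: 32,
};
const up = [0, 1, 0];
const eye = [1, 1, 0];

// No light and a black scene ambient: only the emissive term survives.
const unlit = shadePoint(redPlastic, [0, 0, 0], up, eye, null);
// One light straight above the surface adds diffuse and a little specular.
const lit = shadePoint(redPlastic, [0, 0, 0], up, eye, { toLight: up });
console.log(unlit, lit);
```

This matches the notes above: with no light the diffuse and specular terms contribute nothing, and the ambient term only appears once the scene's ambient color is something other than black.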

Lights

Light is how energy reaches your scene—its direction, color, and intensity decide what pops and what hides. Range and falloff control how quickly that energy fades with distance, while shadows add the cues our eyes use for depth. For physically based looks, image-based lighting (IBL) from an environment map supplies believable ambient light and reflections.

In Babylon.js you mix lights like a stage crew sets a scene. HemisphericLight is a gentle sky-and-ground wash, the ambient glow that fills everything. DirectionalLight behaves like the sun, sending broad, parallel beams that give an outdoor vibe. PointLight is your classic light bulb, shining in every direction from a single spot. SpotLight works like a theater spotlight or flashlight: a steerable cone you can point to highlight key subjects. Adjust each light’s color and intensity to shape mood and guide the viewer’s eye across your scene.

The previous images illustrate how to light the same scene using a DirectionalLight and a PointLight. In the first image, the direction vector of the DirectionalLight is BABYLON.Vector3(0, -1, 0); in the second, the position vector of the PointLight is BABYLON.Vector3(0, 1, 0) (a point light only needs a position, like a light bulb). As you can see in the first image, some areas of the box are dark: the directional light travels straight down, so it never reaches any side of the box that is parallel to the light’s direction.

An example of how to create a DirectionalLight in Babylon.js:

const light = new BABYLON.DirectionalLight("DirectionalLight", new BABYLON.Vector3(0, -1, 0), scene);

In the above snippet, BABYLON.Vector3(0, -1, 0) represents the direction in which the light travels across the scene.
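The difference between a direction and a position can be checked with a few lines of vector math. The sketch below is plain JavaScript, not Babylon.js code, and its helper names are made up for this example. It computes the basic Lambert (cosine) term for a few surface points and deliberately ignores distance falloff and shadows.

```javascript
// Small helpers for [x, y, z] arrays.
const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const normalize = (v) => {
  const len = Math.hypot(v[0], v[1], v[2]);
  return v.map((c) => c / len);
};

// Directional light: the vector toward the light is the same everywhere,
// namely the light's travel direction reversed.
function toLightFromDirectional(direction) {
  return normalize(direction.map((c) => -c));
}

// Point light: the vector toward the light depends on the surface point.
function toLightFromPoint(lightPosition, surfacePoint) {
  return normalize(lightPosition.map((c, i) => c - surfacePoint[i]));
}

// Lambert term: how directly the surface faces the light, from 0 to 1.
const lambert = (normal, toLight) => Math.max(dot(normal, toLight), 0);

const sunDirection = [0, -1, 0]; // same vector as in the DirectionalLight example
const bulbPosition = [0, 1, 0]; // same vector as in the PointLight example
const upNormal = [0, 1, 0]; // a floor facing up
const sideNormal = [1, 0, 0]; // a vertical face, parallel to the sun's rays

// Floor points that move away from the spot directly under the bulb.
const groundPoints = [[0, 0, 0], [1, 0, 0], [2, 0, 0]];
for (const p of groundPoints) {
  const sun = lambert(upNormal, toLightFromDirectional(sunDirection));
  const bulb = lambert(upNormal, toLightFromPoint(bulbPosition, p));
  console.log(p, "sun:", sun.toFixed(2), "bulb:", bulb.toFixed(2));
}

const sideUnderSun = lambert(sideNormal, toLightFromDirectional(sunDirection));
console.log("vertical face under the sun:", sideUnderSun);
```

The directional light shades every floor point identically, while the point light fades as the angle to the bulb gets shallower. The vertical face gets no light from the straight-down directional light, which is the dark-side effect described above.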

Shader

Imagine your 3D scene as a stage play in the browser. Your JavaScript is the script; Babylon.js is the director that builds the set (meshes), places lights, and positions the camera. But who actually paints what you see on the screen? That’s the job of shaders—tiny programs that run on the graphics chip (GPU).

Babylon takes your scene and compiles two main kinds of shader “artists”:

Vertex shader: works point-by-point, deciding where each corner of your models appears on screen (moving from 3D space to 2D view) and passing along handy notes like color, texture coordinates, and surface direction.

Fragment (pixel) shader: paints each visible pixel, mixing colors, textures, and lighting to produce the final look.

Every frame (often up to 60 per second), Babylon updates the set if something moved, regenerates or reuses the shader instructions as needed, and asks the GPU to run them. A simple line like:

const box = BABYLON.MeshBuilder.CreateBox("box", {}, scene);

ultimately becomes data and shader code that the GPU executes to draw your box—fast.
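The two shader stages can be imitated on the CPU to show how they cooperate. The following is a toy sketch in plain JavaScript, not what Babylon.js or the GPU literally runs: a "vertex stage" maps each corner to pixel coordinates, a small rasterizer decides which pixels the triangle covers, and a "fragment stage" blends the corner colors for each covered pixel. All function names are made up for this illustration.

```javascript
// "Vertex stage": map a 2D position in normalized device coordinates
// ([-1, 1] on both axes) to pixel coordinates and pass the color along.
function vertexStage(vertex, width, height) {
  return {
    x: (vertex.position[0] + 1) * 0.5 * width,
    y: (1 - vertex.position[1]) * 0.5 * height, // flip Y: pixel rows grow downward
    color: vertex.color,
  };
}

// Edge function: twice the signed area of the triangle (a, b, p).
function edge(a, b, p) {
  return (b.x - a.x) * (p.y - a.y) - (b.y - a.y) * (p.x - a.x);
}

// "Fragment stage": blend the three corner colors with barycentric weights.
function fragmentStage(weights, colors) {
  return [0, 1, 2].map((ch) =>
    Math.round(
      weights[0] * colors[0][ch] +
        weights[1] * colors[1][ch] +
        weights[2] * colors[2][ch]
    )
  );
}

// Rasterize one triangle into a height x width grid of colors (or null).
function drawTriangle(vertices, width, height) {
  const [a, b, c] = vertices.map((v) => vertexStage(v, width, height));
  const area = edge(a, b, c);
  const pixels = [];
  for (let py = 0; py < height; py++) {
    const row = [];
    for (let px = 0; px < width; px++) {
      const p = { x: px + 0.5, y: py + 0.5 }; // sample at the pixel center
      const w0 = edge(b, c, p) / area;
      const w1 = edge(c, a, p) / area;
      const w2 = edge(a, b, p) / area;
      const inside = area !== 0 && w0 >= 0 && w1 >= 0 && w2 >= 0;
      row.push(
        inside ? fragmentStage([w0, w1, w2], [a.color, b.color, c.color]) : null
      );
    }
    pixels.push(row);
  }
  return pixels;
}

// A triangle covering the lower-left half of a 4x4 "screen".
const triangle = [
  { position: [-1, -1], color: [255, 0, 0] }, // bottom-left, red
  { position: [1, -1], color: [0, 255, 0] }, // bottom-right, green
  { position: [-1, 1], color: [0, 0, 255] }, // top-left, blue
];
const pixels = drawTriangle(triangle, 4, 4);
for (const row of pixels) {
  console.log(row.map((px) => (px ? "#" : ".")).join(""));
}
```

On a GPU the same steps run in parallel for thousands of vertices and millions of pixels, which is where the speed comes from.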

Camera

A camera is your scene’s viewpoint: it defines where you look from and how 3D is projected to the screen (via a viewing frustum with field-of-view plus near/far planes). In short, the camera’s view and projection transform the world into pixels.
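The role of field-of-view and the near/far planes can be made concrete with a small perspective projection. This is a sketch under stated assumptions, not Babylon.js's internal code: the point is already in camera space, the camera looks down +Z (a left-handed convention), depth is mapped to the range [0, 1], and the names projectToNdc and isInFrustum are made up for this example.

```javascript
// Project a camera-space point to normalized device coordinates (NDC).
// Assumes the camera looks down +Z and that depth maps to [0, 1].
function projectToNdc([x, y, z], { fovY, aspect, near, far }) {
  const f = 1 / Math.tan(fovY / 2); // focal scale derived from the vertical FOV
  return {
    x: (f / aspect) * (x / z), // dividing by z makes distant points shrink
    y: f * (y / z),
    depth: (far * (z - near)) / (z * (far - near)),
  };
}

// A point is visible if it lies between the near and far planes and its
// projected x and y fall inside the [-1, 1] square.
function isInFrustum(point, camera) {
  const z = point[2];
  if (z < camera.near || z > camera.far) {
    return false;
  }
  const ndc = projectToNdc(point, camera);
  return Math.abs(ndc.x) <= 1 && Math.abs(ndc.y) <= 1;
}

const camera = { fovY: 0.8, aspect: 16 / 9, near: 0.1, far: 100 };

console.log(projectToNdc([1, 1, 5], camera)); // in front of the camera
console.log(isInFrustum([0, 0, 5], camera)); // straight ahead
console.log(isInFrustum([10, 0, 5], camera)); // far off to the side
console.log(isInFrustum([0, 0, -5], camera)); // behind the camera
```

Narrowing fovY increases f, so the same point lands farther from the center of the screen. That is why a small field of view looks like zooming in.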

Babylon.js offers several ready-made cameras for common workflows. UniversalCamera is the default “FPS-style” camera with keyboard/mouse/touch/gamepad input; ArcRotateCamera orbits a target (great for model viewers); FollowCamera tracks a moving object; and WebXRCamera connects your scene to XR devices. You typically call camera.attachControl(canvas) to wire user input.

Here is an example of how to initialize an ArcRotateCamera in Babylon.js:

var camera = new BABYLON.ArcRotateCamera("Camera", -Math.PI / 2, Math.PI / 2, 5, BABYLON.Vector3.Zero(), scene);

How cameras work in practice: the engine updates the camera’s position/orientation, builds the view/projection matrices (based on your FOV/aspect/near/far), then culls anything outside the frustum and renders what’s visible. Switching camera types changes the control scheme and motion model, not the underlying math.
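The three numeric arguments of the ArcRotateCamera (alpha, beta, radius) can be read as spherical coordinates around the target. The sketch below assumes the usual convention in which alpha rotates around the vertical axis and beta is measured down from it. The function arcRotatePosition is a hypothetical helper written for this article, not a Babylon.js API.

```javascript
// Convert orbit parameters to a camera position around `target`.
// alpha: rotation around the vertical (Y) axis, in radians.
// beta: angle measured down from the +Y axis, in radians.
// radius: distance from the target.
function arcRotatePosition(alpha, beta, radius, target = [0, 0, 0]) {
  return [
    target[0] + radius * Math.cos(alpha) * Math.sin(beta),
    target[1] + radius * Math.cos(beta),
    target[2] + radius * Math.sin(alpha) * Math.sin(beta),
  ];
}

// Same values as the ArcRotateCamera example above.
const position = arcRotatePosition(-Math.PI / 2, Math.PI / 2, 5);
console.log(position.map((c) => c.toFixed(2)));
```

With these values the camera sits roughly at (0, 0, -5): five units from the origin, level with the target. Dragging the mouse on an orbit camera only changes alpha and beta, which is why the view rotates around the target instead of drifting away from it.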

Up next, we’ll unpack the camera’s “mathy” side—viewport, projection matrix, and the viewing frustum (with field-of-view plus near/far planes)—so you can see how a 3D view becomes pixels on your screen.

Scene

A scene in computer graphics is the container for everything that can be drawn—objects, their transforms, cameras, lights, and related state—often organized as a scene graph, a hierarchy where parent transforms affect their children and rendering walks this structure to draw what’s visible.

In Babylon.js, a Scene is that staging area: you add meshes, cameras, and lights to it, and the engine renders the result each frame. It’s the place where you also configure environment textures, post-effects, and performance features; practically, you can’t render without creating a scene first.

Babylon exposes a full scene graph (nodes for meshes, lights, cameras, sprites, layers, animations, and more), letting you parent objects, group behaviors, and manage complex worlds cleanly.
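The statement that parent transforms affect their children can be sketched with a tiny scene graph. This is an illustration in plain JavaScript, limited to translation and uniform scale. Real engines, Babylon.js included, combine full matrices with rotation, and in Babylon.js you would parent nodes through their parent property. The SceneNode class below is hypothetical.

```javascript
// Minimal scene-graph node with a local position and a uniform scale.
class SceneNode {
  constructor(name, { position = [0, 0, 0], scale = 1, parent = null } = {}) {
    this.name = name;
    this.position = position; // relative to the parent
    this.scale = scale; // relative to the parent
    this.parent = parent;
    this.children = [];
    if (parent) {
      parent.children.push(this);
    }
  }

  // Accumulated scale from the root down to this node.
  worldScale() {
    return this.parent ? this.parent.worldScale() * this.scale : this.scale;
  }

  // World position: the parent's world position plus the local offset,
  // with the offset stretched by the parent's accumulated scale.
  worldPosition() {
    if (!this.parent) {
      return [...this.position];
    }
    const origin = this.parent.worldPosition();
    const s = this.parent.worldScale();
    return this.position.map((c, i) => origin[i] + s * c);
  }
}

const car = new SceneNode("car", { position: [10, 0, 0], scale: 2 });
const wheel = new SceneNode("wheel", { position: [1, 0, 0], parent: car });

console.log(wheel.worldPosition()); // the wheel follows the car's transform

// Moving the parent moves the child, although wheel.position is unchanged.
car.position = [0, 5, 0];
console.log(wheel.worldPosition());
```

Because the wheel stores only its offset from the car, animating the car is enough to move everything attached to it.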

We’ll dive deeper next—covering nodes vs. transforms, frustum culling, render order, and scene lifecycle—so you can structure large scenes that stay readable and fast.

Conclusions

After this tour, each line in the classic Babylon.js setup should make sense:

const createScene = function () {
    // Initialize the scene (engine and canvas are assumed to be created beforehand)
    const scene = new BABYLON.Scene(engine);

    // Camera creation: orbit the origin and let the user control it
    const camera = new BABYLON.ArcRotateCamera("Camera", -Math.PI / 3, Math.PI / 4, 5, BABYLON.Vector3.Zero(), scene);
    camera.attachControl(canvas, true);

    // Light creation: a point light one unit above the origin
    const light = new BABYLON.PointLight("light", new BABYLON.Vector3(0, 1, 0), scene);

    // Material creation
    const sphereMaterial = new BABYLON.StandardMaterial("myMaterial", scene);

    // Mesh creation; the material determines the shaders used to draw it
    const sphere = BABYLON.MeshBuilder.CreateSphere("sphere", {}, scene);
    sphere.material = sphereMaterial;

    return scene;
};

The scene is your stage, the camera defines how you look at it, the light makes objects visible, and the mesh is the geometry you draw. Together, they form the minimal setup every sandbox uses: simple parts that connect to the bigger ideas we covered (coordinate spaces, materials, shaders, and the render pipeline). Drop the snippet in, tweak a value or two, and you’ll see the theory come alive on screen, ready for deeper dives in the next articles.
